In the world of software development and data management, JSON (JavaScript Object Notation) has become a popular choice for structuring and exchanging data due to its simplicity and flexibility. JSON provides a convenient way to represent complex data structures that can be easily understood and processed by both humans and machines. However, working with large JSON datasets can sometimes pose challenges, especially when it comes to ensuring data integrity and validation. That’s where GPT (Generative Pre-trained Transformer) comes into play, offering a powerful solution to derive a JSON-schema from JSON-content.

Understanding JSON-Schema

Before delving into the magic of GPT, let’s briefly discuss JSON-Schema. JSON-Schema is a vocabulary that allows you to annotate and validate JSON documents. It provides a formal definition of the expected structure, data types, and constraints for a given JSON dataset. By defining a JSON-Schema, you can ensure that the data adheres to specific rules and requirements, enabling robust validation and efficient data processing.

Leveraging GPT for JSON-Schema Derivation

GPT, powered by its advanced natural language processing capabilities, can be used to derive a JSON-Schema from existing JSON content. No training is necessary, as GPT (in this case version 3.5) comes with everything you need. All you need is OpenAI API access and a suitable prompt like the following, which instructs GPT to behave like a Python function with an input and a return value:

    "role": "system", "content": "You are a python function to analyze JSON string input to generate a JSON schema from it as a JSON string result"

    "role": "user", "content": "Extract the JSON schema of the following JSON content and return it as a JSON string. Treat NULL-Values as optional: {JSON CONTENT}"

In our case we are using the following JSON example file to determine the appropriate schema:

    {
        "articles": [
            {
                "url": "https://www.britannica.com:443/technology/artificial-intelligence",
                "url_mobile": null,
                "title": "Artificial intelligence ( AI ) | Definition , Examples , Types , Applications , Companies , & Facts",
                "seendate": "20230612T054500Z",
                "socialimage": "https://cdn.britannica.com/81/191581-050-8C0A8CD3/Alan-Turing.jpg",
                "domain": "britannica.com",
                "language": "English",
                "sourcecountry": "United States",
                "id": 1
            },
            {
                "url": "https://www.drive.com.au/news/jeep-says-artificial-intelligence-is-heading-off-road/",
                "url_mobile": null,
                "title": "Jeep says Artificial Intelligence is heading off - road",
                "seendate": "20230611T203000Z",
                "socialimage": "https://images.drive.com.au/driveau/image/upload/c_fill,f_auto,g_auto,h_675,q_auto:good,w_1200/cms/uploads/mm6gqcquwz10snybg79k",
                "domain": "drive.com.au",
                "language": "English",
                "sourcecountry": "Australia",
                "id": 2
            },
            {
                "url": "https://labusinessjournal.com/featured/artificial-intelligence-on-call/",
                "url_mobile": null,
                "title": "Artificial intelligence on Call - Los Angeles Business Journal",
                "seendate": "20230612T080000Z",
                "socialimage": "https://images.labusinessjournal.com/wp-content/uploads/2023/06/SR_COVEROPTION_Pearl-Second-Opinion-dentist-with-patietn-point-at-caries-HiRes-copy.jpg",
                "domain": "labusinessjournal.com",
                "language": "English",
                "sourcecountry": "United States",
                "id": 3
            },
            {
                "url": "https://www.emirates247.com/uae/artificial-intelligence-office-organises-ai-enabled-entrepreneurs-conference-in-collaboration-with-nvidia-2023-06-11-1.712994",
                "url_mobile": "https://www.emirates247.com/uae/artificial-intelligence-office-organises-ai-enabled-entrepreneurs-conference-in-collaboration-with-nvidia-2023-06-11-1.712994?ot=ot.AMPPageLayout",
                "title": "Artificial Intelligence Office organises AI - Enabled Entrepreneurs conference in collaboration with NVIDIA",
                "seendate": "20230611T194500Z",
                "socialimage": "https://www.emirates247.com/polopoly_fs/1.712995.1686498090!/image/image.jpg",
                "domain": "emirates247.com",
                "language": "English",
                "sourcecountry": "United Arab Emirates",
                "id": 4
            },
            {
                "url": "https://www.business-standard.com/india-news/ai-offers-new-opportunities-also-brings-threats-to-privacy-amitabh-kant-123061200521_1.html",
                "url_mobile": "https://www.business-standard.com/amp/india-news/ai-offers-new-opportunities-also-brings-threats-to-privacy-amitabh-kant-123061200521_1.html",
                "title": "AI offers new opportunities , also brings threats to privacy : Amitabh Kant",
                "seendate": "20230612T120000Z",
                "socialimage": "https://bsmedia.business-standard.com/_media/bs/img/article/2023-02/14/full/1676346078-2487.jpg",
                "domain": "business-standard.com",
                "language": "English",
                "sourcecountry": "India",
                "id": 5
            },
            {
                "url": "https://www.jamaicaobserver.com/latest-news/tufton-says-pnp-using-ai-to-spread-misinformation-threaten-democracy/",
                "url_mobile": "https://www.jamaicaobserver.com/latest-news/tufton-says-pnp-using-ai-to-spread-misinformation-threaten-democracy/amp/",
                "title": "Tufton says PNP using AI to spread misinformation , threaten democracy",
                "seendate": "20230612T010000Z",
                "socialimage": "https://imengine.public.prod.jam.navigacloud.com/?uuid=49085626-2689-402B-BA2C-7224FE707AF4&function=fit&type=preview",
                "domain": "jamaicaobserver.com",
                "language": "English",
                "sourcecountry": "Jamaica",
                "id": 6
            },
            {
                "url": "https://www.foxnews.com/world/chatgpt-delivers-sermon-packed-german-church-tells-congregants-fear-death",
                "url_mobile": "https://www.foxnews.com/world/chatgpt-delivers-sermon-packed-german-church-tells-congregants-fear-death.amp",
                "title": "ChatGPT delivers sermon to packed German church , tells congregants not to fear death",
                "seendate": "20230611T151500Z",
                "socialimage": "https://static.foxnews.com/foxnews.com/content/uploads/2023/06/Fuerth3.jpg",
                "domain": "foxnews.com",
                "language": "English",
                "sourcecountry": "United States",
                "id": 7
            },
            {
                "url": "https://www.standardmedia.co.ke/opinion/article/2001474967/lets-embrace-ai-for-better-efficient-future-of-work",
                "url_mobile": "https://www.standardmedia.co.ke/amp/opinion/article/2001474967/lets-embrace-ai-for-better-efficient-future-of-work",
                "title": "Let embrace AI for better , efficient future of work",
                "seendate": "20230611T143000Z",
                "socialimage": "https://cdn.standardmedia.co.ke/images/articles/thumbnails/fnIyghHfNlwyrVSTk7HEFSkG6Mb3IkndZiu2Yg6v.jpg",
                "domain": "standardmedia.co.ke",
                "language": "English",
                "sourcecountry": "Kenya",
                "id": 8
            },
            {
                "url": "https://techxplore.com/news/2023-06-chatbot-good-sermon-hundreds-church.html",
                "url_mobile": "https://techxplore.com/news/2023-06-chatbot-good-sermon-hundreds-church.amp",
                "title": "Can a chatbot preach a good sermon ? Hundreds attend church service generated by ChatGPT to find out",
                "seendate": "20230611T140000Z",
                "socialimage": "https://scx2.b-cdn.net/gfx/news/hires/2023/can-a-chatbot-preach-a.jpg",
                "domain": "techxplore.com",
                "language": "English",
                "sourcecountry": "United States",
                "id": 9
            },
            {
                "url": "https://technology.inquirer.net/125039/openai-ceo-asks-china-to-help-create-ai-rules",
                "url_mobile": "https://technology.inquirer.net/125039/openai-ceo-asks-china-to-help-create-ai-rules/amp",
                "title": "OpenAI CEO Asks China For AI Rule Making | Inquirer Technology",
                "seendate": "20230612T074500Z",
                "socialimage": "https://technology.inquirer.net/files/2023/06/pexels-andrew-neel-15863000-620x349.jpg",
                "domain": "technology.inquirer.net",
                "language": "English",
                "sourcecountry": "Philippines",
                "id": 10
            }
        ]
    }

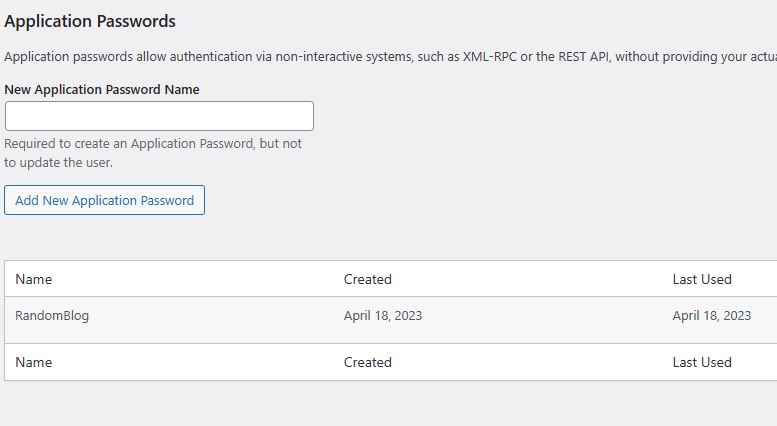

In the next steps we implement some short Python scripts to read the JSON file and make an OpenAI API request. First, we use the following wrapper for the API call:

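    import os

    import openai


    class OpenAIWrapper:
        """Thin wrapper around the chat completion call (pre-1.0 openai package interface)."""

        def __init__(self, temperature):
            self.key = os.environ["OPENAI_API_KEY"]
            self.chat_model_id = "gpt-3.5-turbo-0613"
            self.temperature = temperature
            self.max_tokens = 2048  # upper bound for the generated reply
            self.top_p = 1
            self.time_out = 7  # stored, but not forwarded to the request below

        def run(self, prompt):
            return self._post_request_chat(prompt)

        def _post_request_chat(self, messages):
            """Send the chat messages and return (reply_text, success_flag)."""
            try:
                openai.api_key = self.key
                response = openai.ChatCompletion.create(
                    model=self.chat_model_id,
                    messages=messages,
                    temperature=self.temperature,
                    max_tokens=self.max_tokens,
                    top_p=self.top_p,
                    frequency_penalty=0,
                    presence_penalty=0
                )
                res = response['choices'][0]['message']['content']
                return res, True
            except Exception as e:
                # Report the failure instead of swallowing it silently.
                print(f"OpenAI request failed: {e}")
                return "", False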

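One thing you have to take care of is the size of your input. Note that "max_tokens" limits the length of the generated reply, not the input; the prompt and the reply together must fit into the model's context window. The 16k variant of GPT-3.5 (gpt-3.5-turbo-16k) can handle up to about 16k tokens, whereas the gpt-3.5-turbo-0613 model configured above is limited to about 4k tokens. Our example consists of about 2k tokens, so you may need to abbreviate the input or switch to the larger variant to fit the maximum size.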
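One way to abbreviate the input is to drop trailing items from the largest top-level array until the sample fits a budget. The following is a minimal sketch under stated assumptions: the helper names `estimate_tokens` and `shrink_json_sample` are hypothetical, and the four-characters-per-token figure is only a rough rule of thumb, not the model's real tokenizer.

```python
import json

# Assumption: roughly 4 characters per token. This is a heuristic only.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def shrink_json_sample(json_content: str, token_budget: int) -> str:
    """Drop trailing items from top-level arrays until the sample fits the budget."""
    data = json.loads(json_content)
    if not isinstance(data, dict):
        return json_content  # this sketch only handles a top-level object
    while estimate_tokens(json.dumps(data)) > token_budget:
        # Collect top-level arrays that still have more than one item.
        lists = [v for v in data.values() if isinstance(v, list) and len(v) > 1]
        if not lists:
            break  # nothing left that can be trimmed safely
        max(lists, key=len).pop()  # remove the last item of the longest array
    return json.dumps(data)
```

Keep in mind that trimming may remove exactly the items that show a variation, such as a field that is null in some records only, so it can be worth checking that the shortened sample still covers those cases.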

The second step is to encapsulate our prompts into a python function with a given json_content parameter returning the output of the GPT call:

    from OpenAIWrapper import OpenAIWrapper

    JSON_SCHEMA_LLM_PREFIX = "Extract the JSON schema of the following JSON content and return it as a JSON string. Treat NULL-Values as optional:"
    JSON_SCHEMA_LLM_SUFFIX = "Remember: The reply must only be JSON."


    class JsonSchemaLLM:

        def __init__(self, temperature) -> None:
            self.LLM = OpenAIWrapper(temperature)
            # The system message is sent with every request.
            self.messages = [{"role": "system", "content": "You are a python function to analyze JSON string input to generate a JSON schema from it as a JSON string result"}]

        def get_json_schema(self, json_content):
            '''
            - input: the json content to derive a json schema
            - output: json schema
            '''
            messages = self.messages + [{'role': 'user', "content": JSON_SCHEMA_LLM_PREFIX+'\n'+json_content+'\n'+JSON_SCHEMA_LLM_SUFFIX}]
            response, status = self.LLM.run(messages)
            if status:
                return response
            else:
                return "OpenAI API error."

Please note that we are using a suffix with a reminder for GPT. This is especially important for larger prompts, to ensure that the output is JSON only, without any accompanying description.
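Even with the reminder, a reply is not guaranteed to be pure JSON, so it can help to parse it before using it. The sketch below assumes the reply is either plain JSON or JSON wrapped in a Markdown code fence; the function name `parse_schema_reply` is hypothetical and not part of the code above.

```python
import json

FENCE = "`" * 3  # Markdown code fence marker


def parse_schema_reply(reply: str) -> dict:
    """Parse a model reply into a dict, tolerating a surrounding code fence."""
    text = reply.strip()
    if text.startswith(FENCE):
        lines = text.splitlines()[1:]  # drop the opening fence line
        if lines and lines[-1].strip().startswith(FENCE):
            lines = lines[:-1]  # drop the closing fence line
        text = "\n".join(lines)
    schema = json.loads(text)  # raises json.JSONDecodeError for non-JSON text
    if not isinstance(schema, dict):
        raise ValueError("expected a JSON object as schema")
    return schema
```

Because get_json_schema returns the plain string "OpenAI API error." on failure, passing that value through such a parser raises an exception instead of letting the error text travel on as if it were a schema.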

Finally we can read the example json file and test our code to see if it works:

    from JsonSchemaLLM import JsonSchemaLLM

    model = JsonSchemaLLM(0)

    with open("JsonExample.json", "r") as f:
        code = f.read()

    response = model.get_json_schema(code)
    print(response)

This is the output of our new Python AI function:

    {
        "$schema": "http://json-schema.org/draft-07/schema#",
        "type": "object",
        "properties": {
            "articles": {
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {
                        "url": {
                            "type": "string"
                        },
                        "url_mobile": {
                            "type": ["string", "null"]
                        },
                        "title": {
                            "type": "string"
                        },
                        "seendate": {
                            "type": "string",
                            "format": "date-time"
                        },
                        "socialimage": {
                            "type": "string"
                        },
                        "domain": {
                            "type": "string"
                        },
                        "language": {
                            "type": "string"
                        },
                        "sourcecountry": {
                            "type": "string"
                        },
                        "id": {
                            "type": "integer"
                        }
                    },
                    "required": [
                        "url",
                        "title",
                        "seendate",
                        "socialimage",
                        "domain",
                        "language",
                        "sourcecountry",
                        "id"
                    ],
                    "additionalProperties": false
                }
            }
        },
        "required": ["articles"],
        "additionalProperties": false
    }

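It is important to note that while GPT can provide a valuable starting point for JSON-Schema derivation, human intervention and validation are still crucial. For example, the schema above labels "seendate" with the format "date-time", although values such as 20230612T054500Z use the compact ISO 8601 form rather than the RFC 3339 form that the "date-time" format denotes, so a validator that enforces formats may reject the sample data. The derived schema should therefore be reviewed and refined by domain experts to ensure its accuracy and relevance to the specific context and business requirements.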
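A simple first review step is to check the original sample against the derived schema. The following sketch is a deliberately small, standard-library-only checker, not a full JSON-Schema validator. It assumes that only the keywords "type", "properties", "required", "additionalProperties" and a single-schema "items" matter, and it ignores "format" entirely. The function name `check` is hypothetical; for real use, a complete validator such as the `jsonschema` package is the better choice.

```python
# Maps JSON Schema type names to Python types.
_TYPES = {"object": dict, "array": list, "string": str, "integer": int,
          "number": (int, float), "boolean": bool, "null": type(None)}


def _type_ok(value, type_name):
    if type_name in ("integer", "number") and isinstance(value, bool):
        return False  # Python treats bool as int; JSON Schema does not
    return isinstance(value, _TYPES[type_name])


def check(value, schema, path="$"):
    """Return a list of violations; an empty list means no problem was found."""
    errors = []
    allowed = schema.get("type")
    if allowed is not None:
        names = allowed if isinstance(allowed, list) else [allowed]
        if not any(_type_ok(value, n) for n in names):
            return [f"{path}: expected {names}, got {type(value).__name__}"]
    if isinstance(value, dict):
        props = schema.get("properties", {})
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required key '{key}'")
        if schema.get("additionalProperties") is False:
            for key in value:
                if key not in props:
                    errors.append(f"{path}: unexpected key '{key}'")
        for key, sub in props.items():
            if key in value:
                errors.extend(check(value[key], sub, f"{path}.{key}"))
    elif isinstance(value, list) and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(check(item, schema["items"], f"{path}[{i}]"))
    return errors


# Usage with a reduced version of the derived schema.
demo_schema = {
    "type": "object",
    "properties": {"id": {"type": "integer"},
                   "url_mobile": {"type": ["string", "null"]}},
    "required": ["id"],
    "additionalProperties": False,
}
print(check({"id": 1, "url_mobile": None}, demo_schema))
print(check({"id": "1", "extra": True}, demo_schema))
```

Running the sample file and the generated schema through such a check shows quickly whether the model dropped a field or chose a wrong type, which gives the reviewer a concrete list to start from.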

Conclusion

The use of GPT for deriving a JSON-Schema from JSON content offers immense potential in the realm of data management and software development. It simplifies the process of schema creation, promotes data integrity, and enables deeper insights into data structures. As technology continues to evolve, harnessing the power of GPT becomes increasingly valuable in handling complex data challenges and driving innovation in the digital landscape.